Blanket defenses of AI technology as something inherently good, ethical, and safe are about as common as unjustified fears of AI overlords enslaving the human race. In short, we supposedly have nothing to worry about. While I admire the wish to paint a more accurate portrait of what AI is and isn't, neither extreme represents the truth. Like any technology, AI presents both benefits and new dangers, and only by recognizing these dangers and consciously working to avoid them can we hope to use AI responsibly, in service of the common good.

With the wide proliferation of AI technology come serious concerns about privacy, personal data, and public safety. In the coming weeks, we'll look at a few of these areas of concern, not to stoke fears but to inform, in hopes of helping organizations and individuals alike move forward responsibly with their AI projects.

Why The Long Face(book)?

In a recent blog post on compliance, we touched on the potential for HIPAA violations that AI systems present and some of the consequences your organization faces (fines, lawsuits, audits) when found non-compliant. We didn't, however, delve into the consequences your customers and clients face should you fail to protect their Protected Health Information (PHI), some of which can be far more serious than financial losses. One's medical history is among the most closely guarded information there is, and with good reason: it often concerns, quite literally, matters of life and death. Surely even the most laissez-faire among us would have qualms about corporations gaining knowledge of the inner workings of our bodies or our mental health.

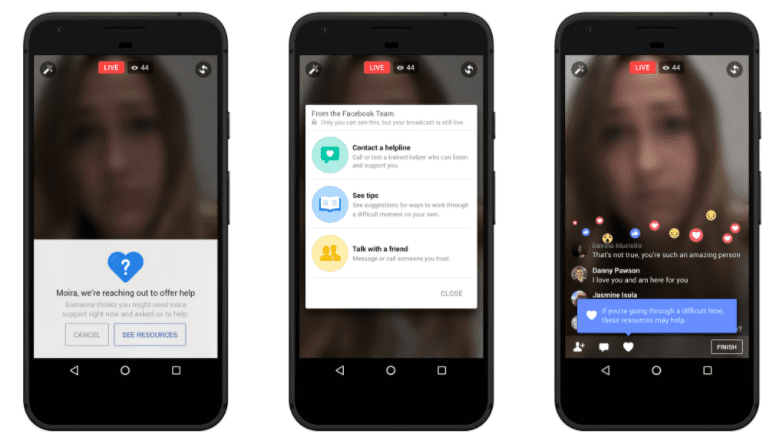

While regulations like HIPAA exist, no law is perfect, and much of the information most people would consider sensitive falls outside HIPAA's purview. Case in point: Facebook's 2017 project to predict suicidal intentions among its users. Part of what the company describes as an "ongoing effort to help build a safe community on and off Facebook," the suicide detection initiative uses algorithms and data analysis to detect at-risk users. Once a user is flagged, Facebook employees review the case to assess the level of danger that user may present to themselves.

A running theme of this blog series will be the tension between an organization's intentions in using personal data and the problems that may arise regardless of intent. No one would argue that suicide prevention isn't a worthy goal, but is any social media company equipped to address mental health crises, even obliquely? And once a user has been flagged as at-risk, what happens to this data? Facebook's infamous lack of transparency about how, why, and for how long user data is stored casts these questions and their possible answers in a sinister light.

23andMe (And Anyone Else Who Wants Your Data)

Facebook and other social media companies are hardly the only ones who engage in troubling uses of health-related information. Consumer genetic testing sites like 23andMe, Ancestry, and Helix oversee a vast reservoir of genetic data, none of which is covered by HIPAA, because these companies aren't technically health companies and don't provide medical services. However, as evidenced by 23andMe's recent partnership with pharma conglomerate GlaxoSmithKline, the genetic data these companies collect doesn't stay with them and is often sold off to partners (and others). And while they may take steps to remove identifying data from samples, it's not that difficult, as Dr. Anil Aswani has pointed out, to sniff out a person's identity when you have their entire genetic code on file.

The Consequences of Carelessness

Beyond the invasion of privacy, using and disseminating personal health and genetic data can have very real, very serious consequences. In the case of Facebook's suicide detection efforts, personal mental health information could be weaponized by employers, for instance when an employee seeks some kind of accommodation. Though employers are required by law to make reasonable accommodations, in the real world this often doesn't happen, leaving employees to endure hostile working conditions or risk losing their jobs.

As for 23andMe et al., the risks of disseminating genetic data are even greater. Say a person pays for a DNA test and discovers they carry a gene that indicates a predisposition to an incurable illness that tends to strike in early middle age. Now say that person's genetic data is sold off and, at some point down the line, falls into the hands of one or more insurance companies. What's to stop an unscrupulous insurer from denying this at-risk person coverage? Thanks to the Genetic Information Nondiscrimination Act (GINA), this hypothetical person could not be denied health insurance based on their predisposition. Other types of insurance, however, fall outside GINA's scope, meaning they could still be denied life insurance, leaving any spouse, children, or other dependents in the lurch in the event of their early death.

A Model Use of AI

While the above situations are certainly cause for concern, they are hardly inevitable. AI technology is not a weapon but a tool. Like any tool, it must be used responsibly to keep the person using it and those around them safe: a circular saw can take a thumb just as easily as it can cut a two-by-four. But when AI is wielded responsibly, the benefits are innumerable. Even a subject as fraught as AI and DNA doesn't have to be a nightmare. The South African insurance company Discovery, for example, hopes to use genetic testing to provide better care for patients and incentivize healthy living. Upon completing a DNA test, Discovery's clients will, according to a report in the Financial Times, "receive a comprehensive report about their genome, including disease risks and potential strategies to improve health, based on their DNA." Rather than denying coverage or profiting from the sale of people's most intimate data, Discovery has opted to use AI in ways that enrich and extend patients' lives.

Ultimately, AI can be used in a variety of ways to help healthcare organizations deliver engaging, secure, and personalized experiences that let teams focus less on complex administrative processes and more on enhancing patient care. Here's an infographic with some information about how AI is being used to re-humanize the healthcare industry:

To Be Continued…

We hope this week's discussion has provided some food for thought, and perhaps some guidance, as you or your organization moves forward with AI. While no system will ever be perfect, it's essential that we continually assess the ethics of our AI-powered platforms and how they affect both our customers and the world at large.
